diff options

Diffstat (limited to 'docs/dev/architecture.md')

| -rw-r--r-- | docs/dev/architecture.md | 472 |

1 files changed, 359 insertions, 113 deletions

diff --git a/docs/dev/architecture.md b/docs/dev/architecture.md index b5831f47c..56ebaa3df 100644 --- a/docs/dev/architecture.md +++ b/docs/dev/architecture.md | |||

| @@ -1,174 +1,420 @@ | |||

| 1 | # Architecture | 1 | # Architecture |

| 2 | 2 | ||

| 3 | This document describes the high-level architecture of rust-analyzer. | 3 | This document describes the high-level architecture of rust-analyzer. |

| 4 | If you want to familiarize yourself with the code base, you are just | 4 | If you want to familiarize yourself with the code base, you are just in the right place! |

| 5 | in the right place! | ||

| 6 | 5 | ||

| 7 | See also the [guide](./guide.md), which walks through a particular snapshot of | 6 | See also the [guide](./guide.md), which walks through a particular snapshot of rust-analyzer code base. |

| 8 | rust-analyzer code base. | ||

| 9 | 7 | ||

| 10 | Yet another resource is this playlist with videos about various parts of the | 8 | Yet another resource is this playlist with videos about various parts of the analyzer: |

| 11 | analyzer: | ||

| 12 | 9 | ||

| 13 | https://www.youtube.com/playlist?list=PL85XCvVPmGQho7MZkdW-wtPtuJcFpzycE | 10 | https://www.youtube.com/playlist?list=PL85XCvVPmGQho7MZkdW-wtPtuJcFpzycE |

| 14 | 11 | ||

| 15 | Note that the guide and videos are pretty dated, this document should be in | 12 | Note that the guide and videos are pretty dated, this document should be, in general, fresher. |

| 16 | generally fresher. | ||

| 17 | 13 | ||

| 18 | ## The Big Picture | 14 | See also these implementation-related blog posts: |

| 15 | |||

| 16 | * https://rust-analyzer.github.io/blog/2019/11/13/find-usages.html | ||

| 17 | * https://rust-analyzer.github.io/blog/2020/07/20/three-architectures-for-responsive-ide.html | ||

| 18 | * https://rust-analyzer.github.io/blog/2020/09/16/challeging-LR-parsing.html | ||

| 19 | * https://rust-analyzer.github.io/blog/2020/09/28/how-to-make-a-light-bulb.html | ||

| 20 | * https://rust-analyzer.github.io/blog/2020/10/24/introducing-ungrammar.html | ||

| 21 | |||

| 22 | ## Bird's Eye View | ||

| 19 | 23 | ||

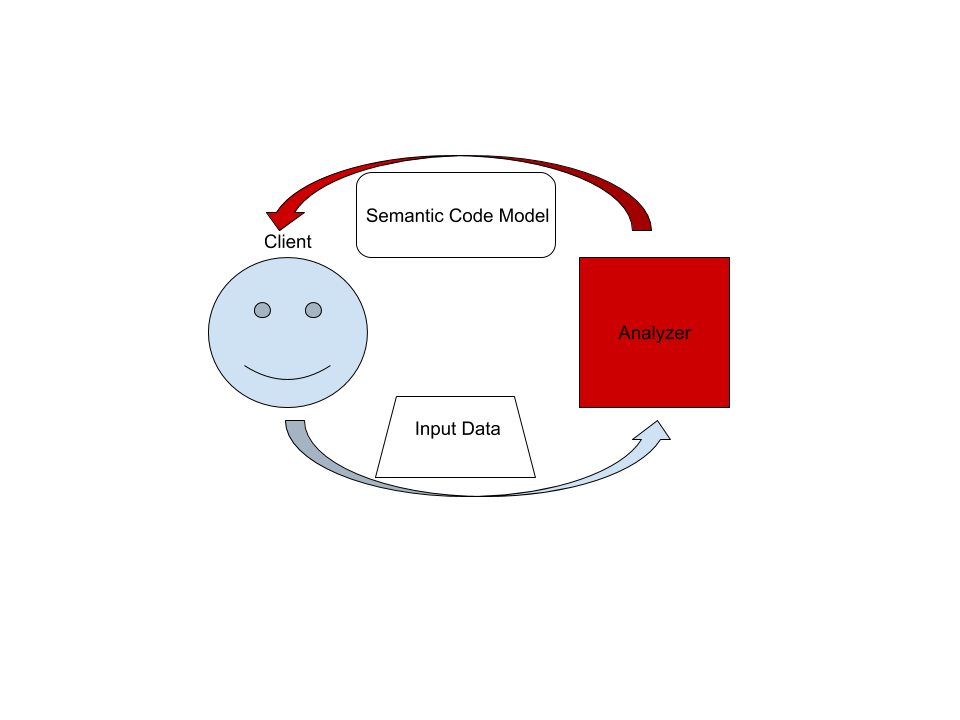

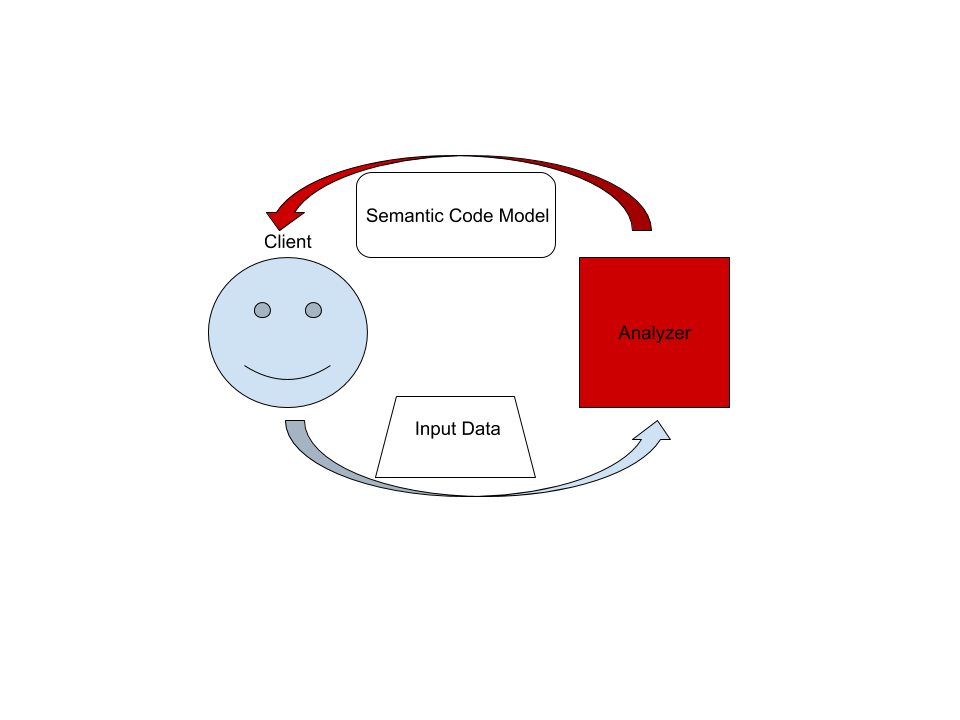

| 20 |  | 24 |  |

| 21 | 25 | ||

| 22 | On the highest level, rust-analyzer is a thing which accepts input source code | 26 | On the highest level, rust-analyzer is a thing which accepts input source code from the client and produces a structured semantic model of the code. |

| 23 | from the client and produces a structured semantic model of the code. | 27 | |

| 28 | More specifically, input data consists of a set of test files (`(PathBuf, String)` pairs) and information about project structure, captured in the so called `CrateGraph`. | ||

| 29 | The crate graph specifies which files are crate roots, which cfg flags are specified for each crate and what dependencies exist between the crates. | ||

| 30 | This is the input (ground) state. | ||

| 31 | The analyzer keeps all this input data in memory and never does any IO. | ||

| 32 | Because the input data is source code, which typically measures in tens of megabytes at most, keeping everything in memory is OK. | ||

| 33 | |||

| 34 | A "structured semantic model" is basically an object-oriented representation of modules, functions and types which appear in the source code. | ||

| 35 | This representation is fully "resolved": all expressions have types, all references are bound to declarations, etc. | ||

| 36 | This is derived state. | ||

| 37 | |||

| 38 | The client can submit a small delta of input data (typically, a change to a single file) and get a fresh code model which accounts for changes. | ||

| 39 | |||

| 40 | The underlying engine makes sure that model is computed lazily (on-demand) and can be quickly updated for small modifications. | ||

| 24 | 41 | ||

| 25 | More specifically, input data consists of a set of test files (`(PathBuf, | ||

| 26 | String)` pairs) and information about project structure, captured in the so | ||

| 27 | called `CrateGraph`. The crate graph specifies which files are crate roots, | ||

| 28 | which cfg flags are specified for each crate and what dependencies exist between | ||

| 29 | the crates. The analyzer keeps all this input data in memory and never does any | ||

| 30 | IO. Because the input data are source code, which typically measures in tens of | ||

| 31 | megabytes at most, keeping everything in memory is OK. | ||

| 32 | 42 | ||

| 33 | A "structured semantic model" is basically an object-oriented representation of | 43 | ## Code Map |

| 34 | modules, functions and types which appear in the source code. This representation | ||

| 35 | is fully "resolved": all expressions have types, all references are bound to | ||

| 36 | declarations, etc. | ||

| 37 | 44 | ||

| 38 | The client can submit a small delta of input data (typically, a change to a | 45 | This section talks briefly about various important directories and data structures. |

| 39 | single file) and get a fresh code model which accounts for changes. | 46 | Pay attention to the **Architecture Invariant** sections. |

| 47 | They often talk about things which are deliberately absent in the source code. | ||

| 40 | 48 | ||

| 41 | The underlying engine makes sure that model is computed lazily (on-demand) and | 49 | Note also which crates are **API Boundaries**. |

| 42 | can be quickly updated for small modifications. | 50 | Remember, [rules at the boundary are different](https://www.tedinski.com/2018/02/06/system-boundaries.html). |

| 43 | 51 | ||

| 52 | ### `xtask` | ||

| 44 | 53 | ||

| 45 | ## Code generation | 54 | This is rust-analyzer's "build system". |

| 55 | We use cargo to compile rust code, but there are also various other tasks, like release management or local installation. | ||

| 56 | They are handled by Rust code in the xtask directory. | ||

| 46 | 57 | ||

| 47 | Some of the components of this repository are generated through automatic | 58 | ### `editors/code` |

| 48 | processes. `cargo xtask codegen` runs all generation tasks. Generated code is | ||

| 49 | committed to the git repository. | ||

| 50 | 59 | ||

| 51 | In particular, `cargo xtask codegen` generates: | 60 | VS Code plugin. |

| 52 | 61 | ||

| 53 | 1. [`syntax_kind/generated`](https://github.com/rust-analyzer/rust-analyzer/blob/a0be39296d2925972cacd9fbf8b5fb258fad6947/crates/ra_parser/src/syntax_kind/generated.rs) | 62 | ### `libs/` |

| 54 | -- the set of terminals and non-terminals of rust grammar. | ||

| 55 | 63 | ||

| 56 | 2. [`ast/generated`](https://github.com/rust-analyzer/rust-analyzer/blob/a0be39296d2925972cacd9fbf8b5fb258fad6947/crates/ra_syntax/src/ast/generated.rs) | 64 | rust-analyzer independent libraries which we publish to crates.io. |

| 57 | -- AST data structure. | 65 | It's not heavily utilized at the moment. |

| 58 | 66 | ||

| 59 | 3. [`doc_tests/generated`](https://github.com/rust-analyzer/rust-analyzer/blob/a0be39296d2925972cacd9fbf8b5fb258fad6947/crates/assists/src/doc_tests/generated.rs), | 67 | ### `crates/parser` |

| 60 | [`test_data/parser/inline`](https://github.com/rust-analyzer/rust-analyzer/tree/a0be39296d2925972cacd9fbf8b5fb258fad6947/crates/ra_syntax/test_data/parser/inline) | ||

| 61 | -- tests for assists and the parser. | ||

| 62 | 68 | ||

| 63 | The source for 1 and 2 is in [`ast_src.rs`](https://github.com/rust-analyzer/rust-analyzer/blob/a0be39296d2925972cacd9fbf8b5fb258fad6947/xtask/src/ast_src.rs). | 69 | It is a hand-written recursive descent parser, which produces a sequence of events like "start node X", "finish node Y". |

| 70 | It works similarly to | ||

| 71 | [kotlin's parser](https://github.com/JetBrains/kotlin/blob/4d951de616b20feca92f3e9cc9679b2de9e65195/compiler/frontend/src/org/jetbrains/kotlin/parsing/KotlinParsing.java), | ||

| 72 | which is a good source of inspiration for dealing with syntax errors and incomplete input. | ||

| 73 | Original [libsyntax parser](https://github.com/rust-lang/rust/blob/6b99adeb11313197f409b4f7c4083c2ceca8a4fe/src/libsyntax/parse/parser.rs) is what we use for the definition of the Rust language. | ||

| 74 | `TreeSink` and `TokenSource` traits bridge the tree-agnostic parser from `grammar` with `rowan` trees. | ||

| 64 | 75 | ||

| 65 | ## Code Walk-Through | 76 | **Architecture Invariant:** the parser is independent of the particular tree structure and particular representation of the tokens. |

| 77 | It transforms one flat stream of events into another flat stream of events. | ||

| 78 | Token independence allows us to pares out both text-based source code and `tt`-based macro input. | ||

| 79 | Tree independence allows us to more easily vary the syntax tree implementation. | ||

| 80 | It should also unlock efficient light-parsing approaches. | ||

| 81 | For example, you can extract the set of names defined in a file (for typo correction) without building a syntax tree. | ||

| 66 | 82 | ||

| 67 | ### `crates/ra_syntax`, `crates/parser` | 83 | **Architecture Invariant:** parsing never fails, the parser produces `(T, Vec<Error>)` rather than `Result<T, Error>`. |

| 68 | 84 | ||

| 69 | Rust syntax tree structure and parser. See | 85 | ### `crates/syntax` |

| 70 | [RFC](https://github.com/rust-lang/rfcs/pull/2256) and [./syntax.md](./syntax.md) for some design notes. | 86 | |

| 87 | Rust syntax tree structure and parser. | ||

| 88 | See [RFC](https://github.com/rust-lang/rfcs/pull/2256) and [./syntax.md](./syntax.md) for some design notes. | ||

| 71 | 89 | ||

| 72 | - [rowan](https://github.com/rust-analyzer/rowan) library is used for constructing syntax trees. | 90 | - [rowan](https://github.com/rust-analyzer/rowan) library is used for constructing syntax trees. |

| 73 | - `grammar` module is the actual parser. It is a hand-written recursive descent parser, which | ||

| 74 | produces a sequence of events like "start node X", "finish node Y". It works similarly to [kotlin's parser](https://github.com/JetBrains/kotlin/blob/4d951de616b20feca92f3e9cc9679b2de9e65195/compiler/frontend/src/org/jetbrains/kotlin/parsing/KotlinParsing.java), | ||

| 75 | which is a good source of inspiration for dealing with syntax errors and incomplete input. Original [libsyntax parser](https://github.com/rust-lang/rust/blob/6b99adeb11313197f409b4f7c4083c2ceca8a4fe/src/libsyntax/parse/parser.rs) | ||

| 76 | is what we use for the definition of the Rust language. | ||

| 77 | - `TreeSink` and `TokenSource` traits bridge the tree-agnostic parser from `grammar` with `rowan` trees. | ||

| 78 | - `ast` provides a type safe API on top of the raw `rowan` tree. | 91 | - `ast` provides a type safe API on top of the raw `rowan` tree. |

| 79 | - `ast_src` description of the grammar, which is used to generate `syntax_kinds` | 92 | - `ungrammar` description of the grammar, which is used to generate `syntax_kinds` and `ast` modules, using `cargo xtask codegen` command. |

| 80 | and `ast` modules, using `cargo xtask codegen` command. | 93 | |

| 94 | Tests for ra_syntax are mostly data-driven. | ||

| 95 | `test_data/parser` contains subdirectories with a bunch of `.rs` (test vectors) and `.txt` files with corresponding syntax trees. | ||

| 96 | During testing, we check `.rs` against `.txt`. | ||

| 97 | If the `.txt` file is missing, it is created (this is how you update tests). | ||

| 98 | Additionally, running `cargo xtask codegen` will walk the grammar module and collect all `// test test_name` comments into files inside `test_data/parser/inline` directory. | ||

| 99 | |||

| 100 | To update test data, run with `UPDATE_EXPECT` variable: | ||

| 81 | 101 | ||

| 82 | Tests for ra_syntax are mostly data-driven: `test_data/parser` contains subdirectories with a bunch of `.rs` | 102 | ```bash |

| 83 | (test vectors) and `.txt` files with corresponding syntax trees. During testing, we check | 103 | env UPDATE_EXPECT=1 cargo qt |

| 84 | `.rs` against `.txt`. If the `.txt` file is missing, it is created (this is how you update | 104 | ``` |

| 85 | tests). Additionally, running `cargo xtask codegen` will walk the grammar module and collect | ||

| 86 | all `// test test_name` comments into files inside `test_data/parser/inline` directory. | ||

| 87 | 105 | ||

| 88 | Note | 106 | After adding a new inline test you need to run `cargo xtest codegen` and also update the test data as described above. |

| 89 | [`api_walkthrough`](https://github.com/rust-analyzer/rust-analyzer/blob/2fb6af89eb794f775de60b82afe56b6f986c2a40/crates/ra_syntax/src/lib.rs#L190-L348) | 107 | |

| 108 | Note [`api_walkthrough`](https://github.com/rust-analyzer/rust-analyzer/blob/2fb6af89eb794f775de60b82afe56b6f986c2a40/crates/ra_syntax/src/lib.rs#L190-L348) | ||

| 90 | in particular: it shows off various methods of working with syntax tree. | 109 | in particular: it shows off various methods of working with syntax tree. |

| 91 | 110 | ||

| 92 | See [#93](https://github.com/rust-analyzer/rust-analyzer/pull/93) for an example PR which | 111 | See [#93](https://github.com/rust-analyzer/rust-analyzer/pull/93) for an example PR which fixes a bug in the grammar. |

| 93 | fixes a bug in the grammar. | 112 | |

| 113 | **Architecture Invariant:** `syntax` crate is completely independent from the rest of rust-analyzer. It knows nothing about salsa or LSP. | ||

| 114 | This is important because it is possible to make useful tooling using only the syntax tree. | ||

| 115 | Without semantic information, you don't need to be able to _build_ code, which makes the tooling more robust. | ||

| 116 | See also https://web.stanford.edu/~mlfbrown/paper.pdf. | ||

| 117 | You can view the `syntax` crate as an entry point to rust-analyzer. | ||

| 118 | `syntax` crate is an **API Boundary**. | ||

| 119 | |||

| 120 | **Architecture Invariant:** syntax tree is a value type. | ||

| 121 | The tree is fully determined by the contents of its syntax nodes, it doesn't need global context (like an interner) and doesn't store semantic info. | ||

| 122 | Using the tree as a store for semantic info is convenient in traditional compilers, but doesn't work nicely in the IDE. | ||

| 123 | Specifically, assists and refactors require transforming syntax trees, and that becomes awkward if you need to do something with the semantic info. | ||

| 124 | |||

| 125 | **Architecture Invariant:** syntax tree is built for a single file. | ||

| 126 | This is to enable parallel parsing of all files. | ||

| 127 | |||

| 128 | **Architecture Invariant:** Syntax trees are by design incomplete and do not enforce well-formedness. | ||

| 129 | If an AST method returns an `Option`, it *can* be `None` at runtime, even if this is forbidden by the grammar. | ||

| 94 | 130 | ||

| 95 | ### `crates/base_db` | 131 | ### `crates/base_db` |

| 96 | 132 | ||

| 97 | We use the [salsa](https://github.com/salsa-rs/salsa) crate for incremental and | 133 | We use the [salsa](https://github.com/salsa-rs/salsa) crate for incremental and on-demand computation. |

| 98 | on-demand computation. Roughly, you can think of salsa as a key-value store, but | 134 | Roughly, you can think of salsa as a key-value store, but it can also compute derived values using specified functions. The `base_db` crate provides basic infrastructure for interacting with salsa. |

| 99 | it also can compute derived values using specified functions. The `base_db` crate | 135 | Crucially, it defines most of the "input" queries: facts supplied by the client of the analyzer. |

| 100 | provides basic infrastructure for interacting with salsa. Crucially, it | 136 | Reading the docs of the `base_db::input` module should be useful: everything else is strictly derived from those inputs. |

| 101 | defines most of the "input" queries: facts supplied by the client of the | 137 | |

| 102 | analyzer. Reading the docs of the `base_db::input` module should be useful: | 138 | **Architecture Invariant:** particularities of the build system are *not* the part of the ground state. |

| 103 | everything else is strictly derived from those inputs. | 139 | In particular, `base_db` knows nothing about cargo. |

| 140 | The `CrateGraph` structure is used to represent the dependencies between the crates abstractly. | ||

| 141 | |||

| 142 | **Architecture Invariant:** `base_db` doesn't know about file system and file paths. | ||

| 143 | Files are represented with opaque `FileId`, there's no operation to get an `std::path::Path` out of the `FileId`. | ||

| 144 | |||

| 145 | ### `crates/hir_expand`, `crates/hir_def`, `crates/hir_ty` | ||

| 146 | |||

| 147 | These crates are the *brain* of rust-analyzer. | ||

| 148 | This is the compiler part of the IDE. | ||

| 149 | |||

| 150 | `hir_xxx` crates have a strong ECS flavor, in that they work with raw ids and directly query the database. | ||

| 151 | There's little abstraction here. | ||

| 152 | These crates integrate deeply with salsa and chalk. | ||

| 153 | |||

| 154 | Name resolution, macro expansion and type inference all happen here. | ||

| 155 | These crates also define various intermediate representations of the core. | ||

| 156 | |||

| 157 | `ItemTree` condenses a single `SyntaxTree` into a "summary" data structure, which is stable over modifications to function bodies. | ||

| 104 | 158 | ||

| 105 | ### `crates/hir*` crates | 159 | `DefMap` contains the module tree of a crate and stores module scopes. |

| 106 | 160 | ||

| 107 | HIR provides high-level "object oriented" access to Rust code. | 161 | `Body` stores information about expressions. |

| 108 | 162 | ||

| 109 | The principal difference between HIR and syntax trees is that HIR is bound to a | 163 | **Architecture Invariant:** these crates are not, and will never be, an api boundary. |

| 110 | particular crate instance. That is, it has cfg flags and features applied. So, | ||

| 111 | the relation between syntax and HIR is many-to-one. The `source_binder` module | ||

| 112 | is responsible for guessing a HIR for a particular source position. | ||

| 113 | 164 | ||

| 114 | Underneath, HIR works on top of salsa, using a `HirDatabase` trait. | 165 | **Architecture Invariant:** these crates explicitly care about being incremental. |

| 166 | The core invariant we maintain is "typing inside a function's body never invalidates global derived data". | ||

| 167 | i.e., if you change the body of `foo`, all facts about `bar` should remain intact. | ||

| 115 | 168 | ||

| 116 | `hir_xxx` crates have a strong ECS flavor, in that they work with raw ids and | 169 | **Architecture Invariant:** hir exists only in context of particular crate instance with specific CFG flags. |

| 117 | directly query the database. | 170 | The same syntax may produce several instances of HIR if the crate participates in the crate graph more than once. |

| 118 | 171 | ||

| 119 | The top-level `hir` façade crate wraps ids into a more OO-flavored API. | 172 | ### `crates/hir` |

| 173 | |||

| 174 | The top-level `hir` crate is an **API Boundary**. | ||

| 175 | If you think about "using rust-analyzer as a library", `hir` crate is most likely the façade you'll be talking to. | ||

| 176 | |||

| 177 | It wraps ECS-style internal API into a more OO-flavored API (with an extra `db` argument for each call). | ||

| 178 | |||

| 179 | **Architecture Invariant:** `hir` provides a static, fully resolved view of the code. | ||

| 180 | While internal `hir_*` crates _compute_ things, `hir`, from the outside, looks like an inert data structure. | ||

| 181 | |||

| 182 | `hir` also handles the delicate task of going from syntax to the corresponding `hir`. | ||

| 183 | Remember that the mapping here is one-to-many. | ||

| 184 | See `Semantics` type and `source_to_def` module. | ||

| 185 | |||

| 186 | Note in particular a curious recursive structure in `source_to_def`. | ||

| 187 | We first resolve the parent _syntax_ node to the parent _hir_ element. | ||

| 188 | Then we ask the _hir_ parent what _syntax_ children does it have. | ||

| 189 | Then we look for our node in the set of children. | ||

| 190 | |||

| 191 | This is the heart of many IDE features, like goto definition, which start with figuring out the hir node at the cursor. | ||

| 192 | This is some kind of (yet unnamed) uber-IDE pattern, as it is present in Roslyn and Kotlin as well. | ||

| 120 | 193 | ||

| 121 | ### `crates/ide` | 194 | ### `crates/ide` |

| 122 | 195 | ||

| 123 | A stateful library for analyzing many Rust files as they change. `AnalysisHost` | 196 | The `ide` crate builds on top of `hir` semantic model to provide high-level IDE features like completion or goto definition. |

| 124 | is a mutable entity (clojure's atom) which holds the current state, incorporates | 197 | It is an **API Boundary**. |

| 125 | changes and hands out `Analysis` --- an immutable and consistent snapshot of | 198 | If you want to use IDE parts of rust-analyzer via LSP, custom flatbuffers-based protocol or just as a library in your text editor, this is the right API. |

| 126 | the world state at a point in time, which actually powers analysis. | 199 | |

| 200 | **Architecture Invariant:** `ide` crate's API is build out of POD types with public fields. | ||

| 201 | The API uses editor's terminology, it talks about offsets and string labels rather than in terms of definitions or types. | ||

| 202 | It is effectively the view in MVC and viewmodel in [MVVM](https://en.wikipedia.org/wiki/Model%E2%80%93view%E2%80%93viewmodel). | ||

| 203 | All arguments and return types are conceptually serializable. | ||

| 204 | In particular, syntax tress and and hir types are generally absent from the API (but are used heavily in the implementation). | ||

| 205 | Shout outs to LSP developers for popularizing the idea that "UI" is a good place to draw a boundary at. | ||

| 206 | |||

| 207 | `ide` is also the first crate which has the notion of change over time. | ||

| 208 | `AnalysisHost` is a state to which you can transactionally `apply_change`. | ||

| 209 | `Analysis` is an immutable snapshot of the state. | ||

| 127 | 210 | ||

| 128 | One interesting aspect of analysis is its support for cancellation. When a | 211 | Internally, `ide` is split across several crates. `ide_assists`, `ide_completion` and `ide_ssr` implement large isolated features. |

| 129 | change is applied to `AnalysisHost`, first all currently active snapshots are | 212 | `ide_db` implements common IDE functionality (notably, reference search is implemented here). |

| 130 | canceled. Only after all snapshots are dropped the change actually affects the | 213 | The `ide` contains a public API/façade, as well as implementation for a plethora of smaller features. |

| 131 | database. | ||

| 132 | 214 | ||

| 133 | APIs in this crate are IDE centric: they take text offsets as input and produce | 215 | **Architecture Invariant:** `ide` crate strives to provide a _perfect_ API. |

| 134 | offsets and strings as output. This works on top of rich code model powered by | 216 | Although at the moment it has only one consumer, the LSP server, LSP *does not* influence it's API design. |

| 135 | `hir`. | 217 | Instead, we keep in mind a hypothetical _ideal_ client -- an IDE tailored specifically for rust, every nook and cranny of which is packed with Rust-specific goodies. |

| 136 | 218 | ||

| 137 | ### `crates/rust-analyzer` | 219 | ### `crates/rust-analyzer` |

| 138 | 220 | ||

| 139 | An LSP implementation which wraps `ide` into a language server protocol. | 221 | This crate defines the `rust-analyzer` binary, so it is the **entry point**. |

| 222 | It implements the language server. | ||

| 223 | |||

| 224 | **Architecture Invariant:** `rust-analyzer` is the only crate that knows about LSP and JSON serialization. | ||

| 225 | If you want to expose a datastructure `X` from ide to LSP, don't make it serializable. | ||

| 226 | Instead, create a serializable counterpart in `rust-analyzer` crate and manually convert between the two. | ||

| 227 | |||

| 228 | `GlobalState` is the state of the server. | ||

| 229 | The `main_loop` defines the server event loop which accepts requests and sends responses. | ||

| 230 | Requests that modify the state or might block user's typing are handled on the main thread. | ||

| 231 | All other requests are processed in background. | ||

| 232 | |||

| 233 | **Architecture Invariant:** the server is stateless, a-la HTTP. | ||

| 234 | Sometimes state needs to be preserved between requests. | ||

| 235 | For example, "what is the `edit` for the fifth completion item of the last completion edit?". | ||

| 236 | For this, the second request should include enough info to re-create the context from scratch. | ||

| 237 | This generally means including all the parameters of the original request. | ||

| 238 | |||

| 239 | `reload` module contains the code that handles configuration and Cargo.toml changes. | ||

| 240 | This is a tricky business. | ||

| 241 | |||

| 242 | **Architecture Invariant:** `rust-analyzer` should be partially available even when the build is broken. | ||

| 243 | Reloading process should not prevent IDE features from working. | ||

| 244 | |||

| 245 | ### `crates/toolchain`, `crates/project_model`, `crates/flycheck` | ||

| 246 | |||

| 247 | These crates deal with invoking `cargo` to learn about project structure and get compiler errors for the "check on save" feature. | ||

| 248 | |||

| 249 | They use `crates/path` heavily instead of `std::path`. | ||

| 250 | A single `rust-analyzer` process can serve many projects, so it is important that server's current directory does not leak. | ||

| 251 | |||

| 252 | ### `crates/mbe`, `crates/tt`, `crates/proc_macro_api`, `crates/proc_macro_srv` | ||

| 253 | |||

| 254 | These crates implement macros as token tree -> token tree transforms. | ||

| 255 | They are independent from the rest of the code. | ||

| 256 | |||

| 257 | ### `crates/cfg` | ||

| 258 | |||

| 259 | This crate is responsible for parsing, evaluation and general definition of `cfg` attributes. | ||

| 140 | 260 | ||

| 141 | ### `crates/vfs` | 261 | ### `crates/vfs`, `crates/vfs-notify` |

| 142 | 262 | ||

| 143 | Although `hir` and `ide` don't do any IO, we need to be able to read | 263 | These crates implement a virtual file system. |

| 144 | files from disk at the end of the day. This is what `vfs` does. It also | 264 | They provide consistent snapshots of the underlying file system and insulate messy OS paths. |

| 145 | manages overlays: "dirty" files in the editor, whose "true" contents is | ||

| 146 | different from data on disk. | ||

| 147 | 265 | ||

| 148 | ## Testing Infrastructure | 266 | **Architecture Invariant:** vfs doesn't assume a single unified file system. |

| 267 | i.e., a single rust-analyzer process can act as a remote server for two different machines, where the same `/tmp/foo.rs` path points to different files. | ||

| 268 | For this reason, all path APIs generally take some existing path as a "file system witness". | ||

| 149 | 269 | ||

| 150 | Rust Analyzer has three interesting [systems | 270 | ### `crates/stdx` |

| 151 | boundaries](https://www.tedinski.com/2018/04/10/making-tests-a-positive-influence-on-design.html) | ||

| 152 | to concentrate tests on. | ||

| 153 | 271 | ||

| 154 | The outermost boundary is the `rust-analyzer` crate, which defines an LSP | 272 | This crate contains various non-rust-analyzer specific utils, which could have been in std, as well |

| 155 | interface in terms of stdio. We do integration testing of this component, by | 273 | as copies of unstable std items we would like to make use of already, like `std::str::split_once`. |

| 156 | feeding it with a stream of LSP requests and checking responses. These tests are | ||

| 157 | known as "heavy", because they interact with Cargo and read real files from | ||

| 158 | disk. For this reason, we try to avoid writing too many tests on this boundary: | ||

| 159 | in a statically typed language, it's hard to make an error in the protocol | ||

| 160 | itself if messages are themselves typed. | ||

| 161 | 274 | ||

| 162 | The middle, and most important, boundary is `ide`. Unlike | 275 | ### `crates/profile` |

| 163 | `rust-analyzer`, which exposes API, `ide` uses Rust API and is intended to | ||

| 164 | use by various tools. Typical test creates an `AnalysisHost`, calls some | ||

| 165 | `Analysis` functions and compares the results against expectation. | ||

| 166 | 276 | ||

| 167 | The innermost and most elaborate boundary is `hir`. It has a much richer | 277 | This crate contains utilities for CPU and memory profiling. |

| 168 | vocabulary of types than `ide`, but the basic testing setup is the same: we | 278 | |

| 169 | create a database, run some queries, assert result. | 279 | |

| 280 | ## Cross-Cutting Concerns | ||

| 281 | |||

| 282 | This sections talks about the things which are everywhere and nowhere in particular. | ||

| 283 | |||

| 284 | ### Code generation | ||

| 285 | |||

| 286 | Some of the components of this repository are generated through automatic processes. | ||

| 287 | `cargo xtask codegen` runs all generation tasks. | ||

| 288 | Generated code is generally committed to the git repository. | ||

| 289 | There are tests to check that the generated code is fresh. | ||

| 290 | |||

| 291 | In particular, we generate: | ||

| 292 | |||

| 293 | * API for working with syntax trees (`syntax::ast`, the [`ungrammar`](https://github.com/rust-analyzer/ungrammar) crate). | ||

| 294 | * Various sections of the manual: | ||

| 295 | |||

| 296 | * features | ||

| 297 | * assists | ||

| 298 | * config | ||

| 299 | |||

| 300 | * Documentation tests for assists | ||

| 301 | |||

| 302 | **Architecture Invariant:** we avoid bootstrapping. | ||

| 303 | For codegen we need to parse Rust code. | ||

| 304 | Using rust-analyzer for that would work and would be fun, but it would also complicate the build process a lot. | ||

| 305 | For that reason, we use syn and manual string parsing. | ||

| 306 | |||

| 307 | ### Cancellation | ||

| 308 | |||

| 309 | Let's say that the IDE is in the process of computing syntax highlighting, when the user types `foo`. | ||

| 310 | What should happen? | ||

| 311 | `rust-analyzer`s answer is that the highlighting process should be cancelled -- its results are now stale, and it also blocks modification of the inputs. | ||

| 312 | |||

| 313 | The salsa database maintains a global revision counter. | ||

| 314 | When applying a change, salsa bumps this counter and waits until all other threads using salsa finish. | ||

| 315 | If a thread does salsa-based computation and notices that the counter is incremented, it panics with a special value (see `Canceled::throw`). | ||

| 316 | That is, rust-analyzer requires unwinding. | ||

| 317 | |||

| 318 | `ide` is the boundary where the panic is caught and transformed into a `Result<T, Cancelled>`. | ||

| 319 | |||

| 320 | ### Testing | ||

| 321 | |||

| 322 | Rust Analyzer has three interesting [system boundaries](https://www.tedinski.com/2018/04/10/making-tests-a-positive-influence-on-design.html) to concentrate tests on. | ||

| 323 | |||

| 324 | The outermost boundary is the `rust-analyzer` crate, which defines an LSP interface in terms of stdio. | ||

| 325 | We do integration testing of this component, by feeding it with a stream of LSP requests and checking responses. | ||

| 326 | These tests are known as "heavy", because they interact with Cargo and read real files from disk. | ||

| 327 | For this reason, we try to avoid writing too many tests on this boundary: in a statically typed language, it's hard to make an error in the protocol itself if messages are themselves typed. | ||

| 328 | Heavy tests are only run when `RUN_SLOW_TESTS` env var is set. | ||

| 329 | |||

| 330 | The middle, and most important, boundary is `ide`. | ||

| 331 | Unlike `rust-analyzer`, which exposes API, `ide` uses Rust API and is intended for use by various tools. | ||

| 332 | A typical test creates an `AnalysisHost`, calls some `Analysis` functions and compares the results against expectation. | ||

| 333 | |||

| 334 | The innermost and most elaborate boundary is `hir`. | ||

| 335 | It has a much richer vocabulary of types than `ide`, but the basic testing setup is the same: we create a database, run some queries, assert result. | ||

| 170 | 336 | ||

| 171 | For comparisons, we use the `expect` crate for snapshot testing. | 337 | For comparisons, we use the `expect` crate for snapshot testing. |

| 172 | 338 | ||

| 173 | To test various analysis corner cases and avoid forgetting about old tests, we | 339 | To test various analysis corner cases and avoid forgetting about old tests, we use so-called marks. |

| 174 | use so-called marks. See the `marks` module in the `test_utils` crate for more. | 340 | See the `marks` module in the `test_utils` crate for more. |

| 341 | |||

| 342 | **Architecture Invariant:** rust-analyzer tests do not use libcore or libstd. | ||

| 343 | All required library code must be a part of the tests. | ||

| 344 | This ensures fast test execution. | ||

| 345 | |||

| 346 | **Architecture Invariant:** tests are data driven and do not test the API. | ||

| 347 | Tests which directly call various API functions are a liability, because they make refactoring the API significantly more complicated. | ||

| 348 | So most of the tests look like this: | ||

| 349 | |||

| 350 | ```rust | ||

| 351 | fn check(input: &str, expect: expect_test::Expect) { | ||

| 352 | // The single place that actually exercises a particular API | ||

| 353 | } | ||

| 354 | |||

| 355 | |||

| 356 | #[test] | ||

| 357 | fn foo() { | ||

| 358 | check("foo", expect![["bar"]]); | ||

| 359 | } | ||

| 360 | |||

| 361 | #[test] | ||

| 362 | fn spam() { | ||

| 363 | check("spam", expect![["eggs"]]); | ||

| 364 | } | ||

| 365 | // ...and a hundred more tests that don't care about the specific API at all. | ||

| 366 | ``` | ||

| 367 | |||

| 368 | To specify input data, we use a single string literal in a special format, which can describe a set of rust files. | ||

| 369 | See the `Fixture` type. | ||

| 370 | |||

| 371 | **Architecture Invariant:** all code invariants are tested by `#[test]` tests. | ||

| 372 | There's no additional checks in CI, formatting and tidy tests are run with `cargo test`. | ||

| 373 | |||

| 374 | **Architecture Invariant:** tests do not depend on any kind of external resources, they are perfectly reproducible. | ||

| 375 | |||

| 376 | ### Error Handling | ||

| 377 | |||

| 378 | **Architecture Invariant:** core parts of rust-analyzer (`ide`/`hir`) don't interact with the outside world and thus can't fail. | ||

| 379 | Only parts touching LSP are allowed to do IO. | ||

| 380 | |||

| 381 | Internals of rust-analyzer need to deal with broken code, but this is not an error condition. | ||

| 382 | rust-analyzer is robust: various analysis compute `(T, Vec<Error>)` rather than `Result<T, Error>`. | ||

| 383 | |||

| 384 | rust-analyzer is a complex long-running process. | ||

| 385 | It will always have bugs and panics. | ||

| 386 | But a panic in an isolated feature should not bring down the whole process. | ||

| 387 | Each LSP-request is protected by a `catch_unwind`. | ||

| 388 | We use `always` and `never` macros instead of `assert` to gracefully recover from impossible conditions. | ||

| 389 | |||

| 390 | ### Observability | ||

| 391 | |||

| 392 | rust-analyzer is a long-running process, so its important to understand what's going on inside. | ||

| 393 | We have several instruments for that. | ||

| 394 | |||

| 395 | The event loop that runs rust-analyzer is very explicit. | ||

| 396 | Rather than spawning futures or scheduling callbacks (open), the event loop accepts an `enum` of possible events (closed). | ||

| 397 | It's easy to see all the things that trigger rust-analyzer processing, together with their performance | ||

| 398 | |||

| 399 | rust-analyzer includes a simple hierarchical profiler (`hprof`). | ||

| 400 | It is enabled with `RA_PROFILE='*>50` env var (log all (`*`) actions which take more than `50` ms) and produces output like: | ||

| 401 | |||

| 402 | ``` | ||

| 403 | 85ms - handle_completion | ||

| 404 | 68ms - import_on_the_fly | ||

| 405 | 67ms - import_assets::search_for_relative_paths | ||

| 406 | 0ms - crate_def_map:wait (804 calls) | ||

| 407 | 0ms - find_path (16 calls) | ||

| 408 | 2ms - find_similar_imports (1 calls) | ||

| 409 | 0ms - generic_params_query (334 calls) | ||

| 410 | 59ms - trait_solve_query (186 calls) | ||

| 411 | 0ms - Semantics::analyze_impl (1 calls) | ||

| 412 | 1ms - render_resolution (8 calls) | ||

| 413 | 0ms - Semantics::analyze_impl (5 calls) | ||

| 414 | ``` | ||

| 415 | |||

| 416 | This is cheap enough to enable in production. | ||

| 417 | |||

| 418 | |||

| 419 | Similarly, we save live object counting (`RA_COUNT=1`). | ||

| 420 | It is not cheap enough to enable in prod, and this is a bug which should be fixed. | ||